The Wrong Fight

The student didn’t cheat. But their essay was flagged as 98% AI-generated. Turnitin said it was likely written by ChatGPT. The instructor reported it. The student appealed. There was no resolution mechanism. They failed the class.

This is the new reality in schools and universities: administrators leaning on unproven detection tools to uphold academic integrity in a world that has fundamentally changed.

It’s not working.

AI detection tools are failing—not just because they falsely accuse honest students, but because they’re also easily fooled by those who know how to cheat well. They create an illusion of control while eroding trust and introducing new forms of inequity.

But even if the tools worked, the bigger problem remains:

Why are we even trying to detect AI use on homework assignments in the first place?

We don’t grade typed essays for their perfect spelling because we know spell check exists. So why are we assigning open-ended take-home writing and pretending AI doesn’t exist? Can we even enforce a “no AI” rule?

This article is not a defense of cheating. It’s a call to rethink how we measure learning, and to stop clinging to assessment models from 2012 that no longer make sense in a world where intelligent tools are ubiquitous, fast-evolving, and impossible to uninvent.

The Two-Sided Failure of AI Detection

AI detection tools fail in two directions:

False Positives: Punishing the Innocent

Students are increasingly being flagged for writing that’s too organized, too generic, or too polished. Detection tools like Turnitin’s AI detector or GPTZero don’t actually “know” if a student used ChatGPT. They use proxies like token patterns, linguistic stylometry, and entropy models. The result? A multilingual student who relies on structured templates can look more “AI” than the student who pasted an actual GPT paragraph and made a few edits.

There’s no appeals process in most institutions. No visibility into how the algorithm works. No ability to replicate or challenge the flag. The student loses.

If your institution is relying on these tools for academic misconduct proceedings—without secondary verification, human review, or a clear policy architecture—you're playing with legal and reputational fire.

False Negatives: Rewarding the Sophisticated

The irony is this: the better you are at using AI dishonestly, the less likely you are to get caught. Students now scrub AI writing with paraphrasers like Undetectable.ai or use prompt engineering to generate human-like prose. “Humanizer” tools such as WriteHuman, AI Humanize, and BypassGPT are proliferating. Some students paste in their own writing to “train” ChatGPT to mimic their style. Others use a hybrid workflow: write a rough draft, pass it through GPT-4, and revise it into something entirely new.

Detection tools rarely catch this.

Instead, they ensnare the lazy cheater, or the naïve: the ESL student, the kid who used Grammarly, the one who took a writing course and started using topic sentences and transitions.

It’s not just unjust. It’s useless.

As I work with institutional leaders, education companies, and investors navigating these shifts, it’s obvious that new clarity is needed to make forward-looking decisions about AI use.

Homework Isn’t Sacred

If a student uses AI to organize their ideas, improve grammar, or check for clarity, have they cheated?

This is where most “AI cheating” debates go sideways. The assumption is that homework is some kind of sacred individual artifact. It’s not. It’s a practical tool for reinforcing concepts, practicing skills, and—yes—showing progress. But the idea that homework is a perfect window into a student's unaided capabilities has never been true.

We’ve been here before. When spell check became standard in word processors, grading spelling on typed homework became meaningless. If you really wanted to assess spelling ability, you gave a pop quiz on paper. Or you didn’t bother. Because once a tool becomes universal, the value of excluding it becomes questionable.

Trying to detect AI in homework is just the 2025 version of trying to detect spell check in 1999.

It misses the point.

Real-World Work Is Tool-Augmented

The skills we value in the real world are not about producing unaided output. They’re about using tools well.

No one writes marketing copy without spell check. No one does research without Google. Lawyers don’t write briefs without templates. Software engineers don’t start from scratch. CEOs don’t write strategy memos in isolation. They gather, revise, delegate, synthesize.

The job of a student in 2025 isn’t to avoid tools. It’s to use them thoughtfully, transparently, and appropriately.

Banning AI from homework doesn't teach that. It punishes it.

Worse, it teaches students the wrong lesson: that using available tools to do your best work is something to hide. That ethical boundaries around AI use are unclear, subjective, and inconsistently enforced. That school is a game of gotchas, not growth.

This isn’t just a pedagogical issue. We’re actively working with EdTech companies and investors to identify the real unmet needs in this evolving landscape. One of the clearest signals we’re seeing? The demand for tools and platforms that don’t just detect AI but help institutions teach with it, and measure learning beyond it. If you’re building or backing what comes next, now’s the time to get it right.

Turnitin and its competitors are going to be worth about the same as spell check detection tools in 1999.

What Are We Even Measuring?

This is the part most institutions haven’t caught up to yet:

If your assignment only works when students don’t use AI, you’re probably assessing the wrong thing.

If you’re grading for “originality,” but you don’t define what counts as acceptable tool use, are you evaluating thinking, or just surveillance evasion?

If you want to know whether a student understands the material, is the take-home essay really the best instrument? Or is it just a holdover from a time when we had fewer tools?

This isn’t a rhetorical provocation. Assessment design is not just pedagogy. It’s institutional strategy. And it needs to evolve.

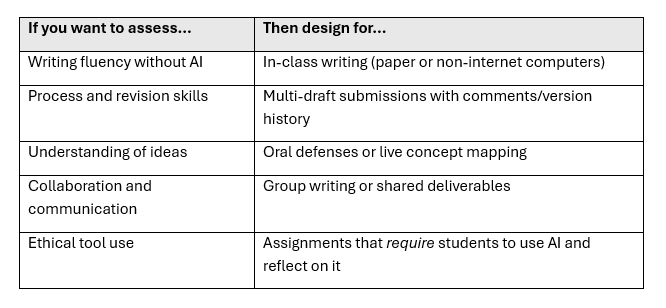

Here’s a better way to think about it:

The goal isn’t to “catch” students. The goal is to prepare them for the real world, and to design assessments that can’t be gamed by AI, or that incorporate AI ethically and transparently.

This is a shift in design philosophy.

I often advise clients in education and digital learning on how to realign strategy when a tool, policy, or market norm becomes obsolete. That’s what this is. AI detection isn’t just ineffective; it’s irrelevant to the deeper job educators and product teams need to be doing.

The real question isn’t how to stop students from using AI. It’s how to build systems that measure what matters.

Smarter Assessment by Design

There’s no need to fear AI in the classroom if you stop designing assignments that collapse the moment a student uses AI.

Assessments going forward will fall into two categories:

- Assessments that are hard to fake with AI, because they require in-person performance, reflection, or interpersonal dynamics.

- Assessments that intentionally incorporate AI, and then evaluate how well the student used it.

Here are the formats forward-thinking educators and institutions are already adopting:

1. Oral Defenses

In a recent article on higher education, “the undergrad experience that matters,” I called for mainstreaming the ‘Oxford Tutorial’ model in the U.S.

Whether or not that happens, an easier solution looks like this: after submitting a project, students answer live questions about their thinking, sources, or decision process. This could be in-person, or it could be via a one-way video interview, where the questions are not known in advance. The technology for doing this at scale already exists.

2. In-Class Writing (Paper or Locked Devices)

Use this sparingly, to establish a benchmark for a student’s natural writing voice and structure.

3. Collaborative Writing and Presentations

AI can’t coordinate human group work (yet). Shared assignments force accountability and engagement.

4. AI-Integrated Assignments

Instead of banning AI, ask students to use it and to show how they used it: “What prompts did you use?”

5. Multi-Draft Submissions with Feedback Logs

Students submit drafts with revision history and brief commentary (“What changed? Why?”). Be aware, though, that this format is still partly stuck in the old mindset of fishing for AI usage.

These formats represent a more durable, more human-centered model of assessment.

For education companies building assessment tools, or institutions trying to adapt existing curricula, this design-first mindset is essential. We’ve worked with both sides: helping EdTech firms define product-market fit in a post-AI learning landscape, and advising institutional leaders on how to operationalize assessment reform at scale. The opportunity isn’t just to “catch up” to AI but to leapfrog toward something better.

What Leaders Should Do Next

For Administrators and Institutional Leaders:

If your current policy on AI use reads like an honor code footnote (“Use of AI is strictly prohibited unless otherwise stated”), you’re fighting a losing battle.

AI use in education isn’t a student discipline issue. It’s a curricular and operational design challenge.

Here’s where to focus:

- Retire blanket bans. They're unenforceable and create uneven application across instructors and departments.

- Build tiered AI policies by assignment type. Think: “AI-discouraged,” “AI-neutral,” “AI-required,” each with defined expectations.

- Train faculty not in “how to detect AI,” but in how to redesign assessments.

- Audit existing assignments: Which ones are resilient? Which collapse under tool use?

- Implement lightweight oral checks for high-stakes written work to restore fairness and prevent disputes.
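
To make the tiering and audit steps above more concrete, here is a minimal sketch of how a department might record them. Everything in it is an illustrative assumption rather than an established standard: the `AITier` and `Assignment` names, the wording of each tier's expectations, and the rule in `needs_oral_check` (flag high-stakes work that has no oral check attached) are all hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class AITier(Enum):
    """The three policy tiers named in the list above."""
    DISCOURAGED = "AI-discouraged"
    NEUTRAL = "AI-neutral"
    REQUIRED = "AI-required"


# Hypothetical expectation text per tier; each institution would write its own.
EXPECTATIONS = {
    AITier.DISCOURAGED: "Work is done unaided; any tool use must be disclosed.",
    AITier.NEUTRAL: "Tools are allowed; the student is accountable for the result.",
    AITier.REQUIRED: "Tools must be used; prompts and revisions are submitted.",
}


@dataclass(frozen=True)
class Assignment:
    name: str
    tier: AITier
    high_stakes: bool = False
    has_oral_check: bool = False


def needs_oral_check(assignment: Assignment) -> bool:
    """Assumed audit rule: high-stakes work should carry an oral check."""
    return assignment.high_stakes and not assignment.has_oral_check


# Made-up catalog entries, for illustration only.
catalog = [
    Assignment("Take-home essay", AITier.DISCOURAGED, high_stakes=True),
    Assignment("Prompt-log research brief", AITier.REQUIRED,
               high_stakes=True, has_oral_check=True),
    Assignment("Weekly reading notes", AITier.NEUTRAL),
]

flagged = [a.name for a in catalog if needs_oral_check(a)]
print(flagged)  # ['Take-home essay']
```

The point of the sketch is only that a tier and its expectations are attached to each assignment, not to the institution as a whole, and that an audit can then be a simple pass over the catalog.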

For EdTech Companies and Investors:

The AI detection market is saturated, overhyped, and high-risk. What’s next is not enforcement but enablement.

The real opportunity lies in building products that:

- Help educators design AI-resilient assignments and workflows.

- Provide visibility into the writing and revision process, not just the final result.

- Track and visualize how students use AI, so faculty can assess intention, skill, and ethics—not just output.

- Enable hybrid assessment models: combining peer review, faculty checkpoints, and tool-usage metadata.

Meanwhile, investors should ask:

- Is this product aligned with where pedagogy is actually going, or is it trying to preserve a failing status quo?

- Does it support long-term trust, adoption, and adaptability, or does it create new liabilities?

Don't Fight the Calculator. Change the Test.

Trying to detect AI writing in student work is like trying to detect calculators in math homework.

You can try to ban the tools. You can try to catch their use. Or you can redesign the test.

The future of education won’t be defined by who builds the best AI detector. It will be defined by who builds the best models for assessing learning in a world where AI is a given.

That means:

- Stop pretending AI use can be cleanly separated from student work.

- Stop assigning tasks that only work under conditions of total isolation.

- Stop writing policies you can’t enforce.

And instead:

- Design assessments that focus on thinking, process, and performance.

- Teach students how to use AI well, and why that matters.

- Build systems where trust is earned through transparency, not surveillance.

In the end, it's about measuring what actually matters.